Why this matters now

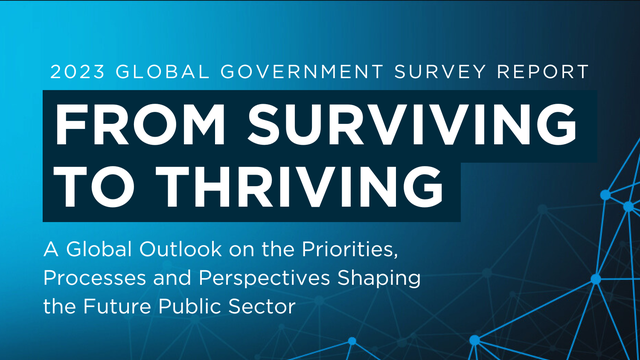

Australia has set itself a target to leverage AI to enhance Government services. Internationally, despite heavy spending, most AI pilots fail to deliver measurable transformation. The recently released MIT Report on State of AI in Business 2025 is essential reading for public-sector leaders, as it exposes the stark reality that 95% of Generative AI pilots yield no return, while only 5% achieve real impact.

The report’s findings are sobering. Failure isn’t driven by regulation or model quality—it’s caused by tools that don’t retain learning, adapt to context, or integrate with workflows. For governments—where accountability, compliance, and explainability are non-negotiable—this evidence underscores the need for a different path.

Why hybrid AI is the right approach for Government

Hybrid AI combines the pattern recognition power of machine learning with the explainability and control of symbolic AI (rules, policies, ontologies). This duality directly addresses the challenges surfaced by MIT’s research:

- Explainability for accountability: every automated step can be traced back to rules aligned with legislation, FOI, and administrative law.

- Learning that lasts: Hybrid AI retains validated knowledge through taxonomies and ontologies.

- Workflow fit: symbolic constraints enforce process integrity in regulated domains (records, grants, compliance).

- Safety by design: sensitive data flows are controlled; decisions can be audited; model drift is contained.

In short, hybrid AI bridges the “GenAI Divide” identified in the MIT report by turning fragile pilots into trusted, outcome-driven systems.

Unstructured data in the APS

Before the APS can unlock the value of hybrid AI, it must confront the reality of its unstructured data—millions of documents, records, and transcripts scattered across systems, each bound by regulations and accountability. To transform this challenge into an asset, agencies must focus on:

- Ability to access information from trusted internal documents with legal lineage.

- Provenance and version control to ensure every decision is defensible.

- Integration with case and record systems, rather than standalone AI silos.

- Controlled learning loops to capture staff feedback safely.

These factors align with the MIT report’s observation that the core barrier is learning, not infrastructure or regulation.

The importance of accurate information extraction

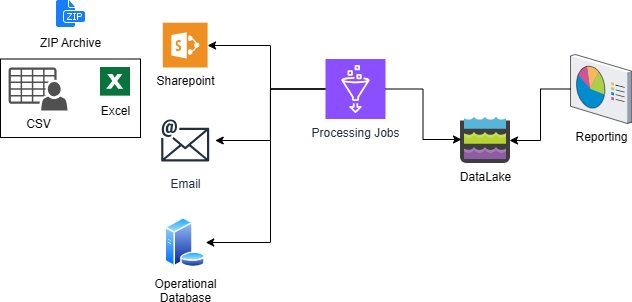

At the heart of AI in government is a simple truth: all advanced analytics, decision support, and automation depend on accurate information extraction from unstructured data. Hybrid AI techniques combine to provide a mechanism for transforming raw, unstructured data into structured, trusted information.

- Single source of truth: Extraction pipelines ensure that data from reports, legislation, correspondence, and case files is standardised into a single authoritative source.

- Foundation for every AI application: Whether the goal is predictive analytics, regulatory monitoring, or case triage, the quality of outputs is only as strong as the quality of extracted data.

- Error reduction: By combining ML for entity recognition with symbolic validation against rules and ontologies, hybrid AI minimises false positives/negatives that could otherwise derail compliance.

- Scalability: Once structured, information can feed multiple downstream systems (case management, risk engines, FOI workflows) without redundant reprocessing.

- Trust and explainability: Symbolic layers ensure that every extracted fact can be traced back to its source and validated, which is critical for audit and public accountability.
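The error-reduction and traceability points above can be illustrated with a minimal sketch. It is not expert.ai's implementation or any specific product API: the regular expression is only a stand-in for an ML entity recogniser, and `ONTOLOGY_ACTS`, `ExtractedFact`, and `extract_facts` are hypothetical names introduced for illustration. The idea shown is that a loose extractor proposes candidates, a symbolic layer checks them against a controlled vocabulary, and every fact keeps a pointer back to its source span.

```python
import re
from dataclasses import dataclass

# Hypothetical controlled vocabulary (a tiny stand-in for an ontology of legislation).
ONTOLOGY_ACTS = frozenset({"Privacy Act 1988", "Archives Act 1983"})

# Stand-in for an ML entity recogniser: a loose pattern that proposes
# anything shaped like "<Capitalised words> Act <year>", right or wrong.
CANDIDATE_PATTERN = re.compile(r"\b(?:[A-Z][a-z]+ )+Act \d{4}\b")


@dataclass(frozen=True)
class ExtractedFact:
    value: str        # the extracted text
    source_id: str    # which document it came from
    start: int        # character offsets into the source text,
    end: int          # so the fact can be traced back for audit
    validated: bool   # True only if the symbolic layer accepts it


def extract_facts(text: str, source_id: str) -> list[ExtractedFact]:
    """Propose candidates, then validate each one against the ontology."""
    facts = []
    for match in CANDIDATE_PATTERN.finditer(text):
        value = match.group(0)
        facts.append(
            ExtractedFact(
                value=value,
                source_id=source_id,
                start=match.start(),
                end=match.end(),
                validated=value in ONTOLOGY_ACTS,
            )
        )
    return facts


if __name__ == "__main__":
    sample = "Records were handled under the Privacy Act 1988 and the Privcy Act 1998."
    for fact in extract_facts(sample, source_id="doc-001"):
        print(fact)
```

In this sketch a misspelt or unknown Act is still captured, but it is marked as not validated, so it can be routed to a person instead of silently entering downstream systems.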

For governments, accurate extraction is not a technical detail but a strategic requirement: it is the prerequisite for creating high-quality, reusable datasets that underpin all AI-enabled transformation. It is also a cost question: how many models is an agency prepared to retrain, and pay for, just to keep pace with evolving language?

Government use cases where hybrid AI delivers

The best way to understand the value of hybrid AI is to see it in action. Across government domains, hybrid approaches have already shown that combining machine learning with symbolic rules can accelerate transformation while preserving legal and ethical standards:

- Regulatory change tracking: ML detects changes; symbolic AI maps them to obligations and workflows.

- Grants and claims triage: ML extracts and classifies; symbolic AI enforces eligibility and fairness rules.

- Case summarisation with legal triggers: ML condenses records; symbolic AI flags statutory considerations.

- FOI requests: ML locates sensitive spans; symbolic AI enforces secrecy and exemption provisions.

- Records retention & disposition: ML classifies archives; symbolic AI applies retention schedules.

Each illustrates how hybrid AI converts unstructured data into trusted, auditable outcomes—exactly the gap identified in the MIT analysis.
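As one illustration of the pattern in the use cases above, the grants and claims triage case might be sketched as follows. This is a simplified example under stated assumptions, not a production design: the `Application` fields, the rule identifiers, and the thresholds (a 50,000 funding cap and a 0.8 confidence floor) are all hypothetical, and the ML classifier is represented only by the category and confidence it would have produced.

```python
from dataclasses import dataclass, field


@dataclass
class Application:
    applicant_type: str      # e.g. "non_profit" or "company"
    requested_amount: float
    ml_category: str         # label assigned by a (hypothetical) ML classifier
    ml_confidence: float     # classifier confidence between 0 and 1


@dataclass
class Decision:
    outcome: str                                  # "eligible" or "refer_to_officer"
    reasons: list = field(default_factory=list)   # (rule_id, description) pairs for audit


# Hypothetical symbolic rules: each has an ID and a plain-language
# description so that a decision can be explained rule by rule.
RULES = [
    ("R1", "Applicant must be a registered non-profit",
     lambda a: a.applicant_type == "non_profit"),
    ("R2", "Requested amount must not exceed 50,000",
     lambda a: a.requested_amount <= 50_000),
    ("R3", "ML classification confidence must be at least 0.8",
     lambda a: a.ml_confidence >= 0.8),
]


def triage(app: Application) -> Decision:
    """Apply every rule and record which ones failed, in rule order."""
    failed = [(rule_id, desc) for rule_id, desc, check in RULES if not check(app)]
    if failed:
        # Nothing is rejected automatically: failures go to a human officer.
        return Decision(outcome="refer_to_officer", reasons=failed)
    return Decision(outcome="eligible")


if __name__ == "__main__":
    print(triage(Application("non_profit", 20_000, "community", 0.93)))
    print(triage(Application("company", 80_000, "community", 0.60)))
```

The design choice here is that the ML output is treated as one input among several, while the rules decide the outcome and supply the reasons an auditor or applicant would need to see.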

Why leaders should read the MIT AI Report

The MIT AI Report 2025 is one of the most comprehensive field studies of AI adoption to date, based on 300+ implementations, 52 structured interviews, and surveys of 153 senior leaders.

For government executives, the report:

- Debunks hype by showing why most GenAI pilot projects stall.

- Provides evidence that outcomes come from systems that learn and integrate—not flashy demos.

- Highlights urgency: the “window to cross the GenAI Divide” is closing as vendors and agencies lock in learning-capable systems.

By pairing its insights with hybrid AI, governments can modernise faster—while staying within the guardrails of regulation, safety, and public trust.

About our partner

Raedan AI

Raedan AI is an Australian specialist integrator and distributor of expert.ai’s hybrid AI platform, focused on delivering trusted, explainable, and compliant AI solutions for the Australian Government and regulated industries.

With a foundation in data management expertise, Raedan AI helps agencies bridge the gap between AI innovation and governance discipline. Its approach ensures that AI systems are not only robust but also semantically grounded, interoperable, and auditable, meeting the demands of legislation, policy, and public accountability.

The core strengths of its end-to-end solutions built on expert.ai are:

- Hybrid AI Leadership: Combining symbolic reasoning and machine learning for explainability, compliance, and scalability.

- Data Management Integration: Taxonomies, ontologies, metadata, and reference data embedded into AI solutions.

- Government Alignment: Solutions designed to meet the AI Assurance Framework, FOI obligations, records management, and transparency requirements.

- Portfolio Relevance: Out-of-the-box modules (regulatory tracking, risk management, claims automation, clinical trials, knowledge harvesting) tailored for Defence, Health, Planning, Justice, and Human Services.

Leveraging 35 years of experience in natural language understanding and over 300 clients in Europe and the US, expert.ai ensures that AI outputs are explainable, defensible, and aligned to Australian standards. Out-of-the-box solutions include:

- Regulatory Tracking: Monitor legislation, policy updates, and compliance obligations.

- Third-Party Risk Management: Automate due diligence on suppliers, contractors, and partners.

- Claims Automation: Reduce processing times and increase accuracy for grants and insurance claims.

- Risk Engineering: Assist infrastructure, transport, and energy regulators in assessing safety and compliance risks.

- Email Classification: Automate routing of citizen correspondence.

- Content Discovery: Unlock hidden insights in unstructured data repositories.